Can The iPhone X’s TrueDepth Camera Be Used For Performance Capture?

The iPhone X’s TrueDepth camera system is the technology behind Apple’s new augmented reality animoji, but vfx artists are discovering that it may have other uses, too.

Through Apple’s ARKit, developers and artists can access the face meshes that are generated for animoji. Taiwanese vfx artist Elisha Hung extracted that data and wrote it to a custom file.

Then, using a Python SOP, he imported the data into Houdini, turning it into conventional, editable 3D geometry.
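For readers curious what such a pipeline might involve, here is a minimal sketch. Hung hasn't published his file format or script, so everything below is an assumption: the plain-text record layout (`t`, `frame`, `v`) and the names `FaceCapture`, `parse_capture` and `build_houdini_geometry` are hypothetical. On the capture side, ARKit exposes each face mesh as an `ARFaceGeometry` with `vertices` and `triangleIndices`; because the face mesh topology is constant, the sketch stores the triangles once and writes only vertex positions per frame. The Houdini half uses standard `hou` calls available inside a Python SOP.

```python
"""Minimal sketch: parse a hypothetical per-frame face-mesh dump.

Assumed (hypothetical) text format, one record per line:
    t a b c      -> triangle vertex indices (topology, written once)
    frame N      -> starts a new frame
    v x y z      -> vertex position belonging to the current frame
Lines starting with '#' and blank lines are ignored.
"""
from dataclasses import dataclass, field
from typing import List, Tuple

Vec3 = Tuple[float, float, float]
Tri = Tuple[int, int, int]


@dataclass
class FaceCapture:
    triangles: List[Tri] = field(default_factory=list)
    frames: List[List[Vec3]] = field(default_factory=list)


def parse_capture(text: str) -> FaceCapture:
    cap = FaceCapture()
    for lineno, raw in enumerate(text.splitlines(), start=1):
        parts = raw.split()
        if not parts or parts[0].startswith("#"):
            continue  # skip blank lines and comments
        tag = parts[0]
        if tag == "t":
            a, b, c = (int(p) for p in parts[1:4])
            cap.triangles.append((a, b, c))
        elif tag == "frame":
            cap.frames.append([])
        elif tag == "v":
            if not cap.frames:
                raise ValueError(f"line {lineno}: vertex before any frame")
            x, y, z = (float(p) for p in parts[1:4])
            cap.frames[-1].append((x, y, z))
        else:
            raise ValueError(f"line {lineno}: unknown record {tag!r}")
    # The face topology is fixed, so every frame should have the same vertex count.
    counts = {len(f) for f in cap.frames}
    if len(counts) > 1:
        raise ValueError(f"inconsistent vertex counts across frames: {sorted(counts)}")
    return cap


def build_houdini_geometry(cap: FaceCapture, frame_index: int) -> None:
    """Only works inside a Houdini Python SOP, where the `hou` module exists."""
    import hou  # provided by Houdini; not available in a plain Python install

    geo = hou.pwd().geometry()  # the SOP's writable output geometry
    points = []
    for pos in cap.frames[frame_index]:
        pt = geo.createPoint()
        pt.setPosition(pos)
        points.append(pt)
    for a, b, c in cap.triangles:
        poly = geo.createPolygon()
        # ARKit and Houdini may use different winding conventions; if normals
        # point inward, try reversing the order (e.g. a, c, b).
        for idx in (a, b, c):
            poly.addVertex(points[idx])
```

Outside Houdini, `parse_capture` can be run on its own to sanity-check a dump. Inside a Python SOP, one would read the file, call `parse_capture`, and pass an index such as `int(hou.frame()) - 1` to `build_houdini_geometry` so the mesh updates as the timeline plays.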

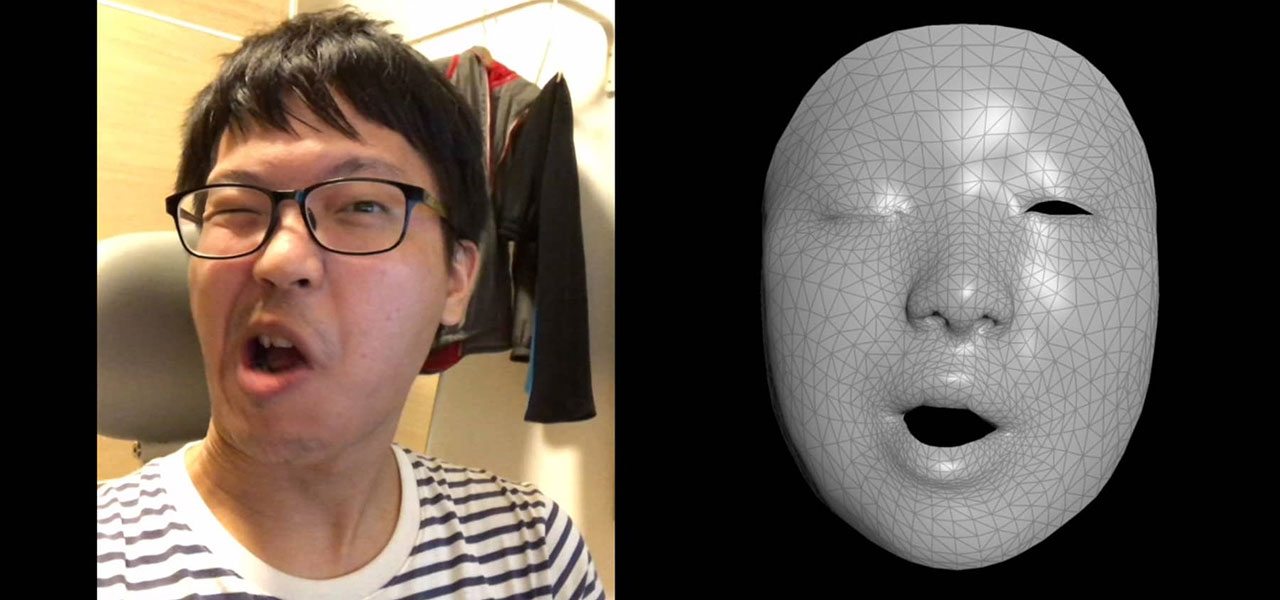

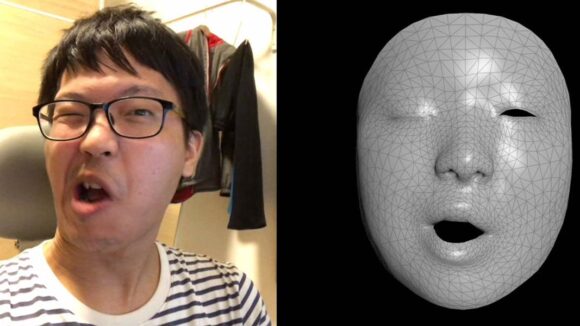

In a second test, Hung did a quick-and-dirty projection map of his face onto the geometry in Houdini.

Many details of Hung’s process remain unclear, and plenty of people are asking questions in his video’s comments. However, the tantalizing potential revealed here is that the iPhone X could serve as a prosumer-level performance capture tool.

Obviously, no one is going to use the iPhone X to capture a performance on the level of Andy Serkis’s work, but plenty of innovation could emerge from making performance capture available to the masses.

In fact, making performance capture cheaper and more widely available was a goal of Faceshift, the Swiss start-up that Apple acquired back in 2015 and whose technology Apple has since developed into animoji. It’s not too hard to draw a line from Faceshift’s activities a couple of years ago to where Apple looks to be headed today.
