Lumiere, Google’s New AI Model, Creates Fully-Animated Video From Text And Photo Prompts

Google Research has shared details about Lumiere, its video-generating AI model, which marks a step forward in the tech industry's push to generate animation through artificial intelligence.

What does Lumiere do? Lumiere is a text-to-video diffusion model that lets users create stylized animated videos. Like other image- and video-generating models, Lumiere takes text and image prompts as input.

How does it work? Lumiere is built on a new architecture called Space-Time U-Net, or STUNet for short. The model tracks where objects are in a frame and how they move and change over time, processing the clip in both space and time. This allows Lumiere to generate an entire video in a single pass, with cleaner movement than was previously possible with other models.

How is Lumiere different from other AI video-generating models? Other programs, such as Runway, Stable Video Diffusion, or Meta’s Emu, patch videos together from individual still frames created by the model, limiting the amount of movement possible in a clip. Their process is similar to piecing together a flipbook one page at a time.

On the other hand, Lumiere creates a foundational frame from the input prompt and then uses its STUNet model to approximate where and how objects within that frame move around. Using the same flipbook analogy, Lumiere conceptualizes the entire book as a whole, allowing it to produce more frames of animation and smoother movement.
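To make the flipbook analogy a bit more concrete, here is a toy Python sketch of the bookkeeping involved. It is not Lumiere's code and runs no model: it only tracks the shape of a video tensor as a U-Net-style encoder shrinks it level by level. A conventional spatial U-Net halves each frame's height and width but keeps every frame, while a space-time U-Net in the STUNet mould also halves the number of frames, so its coarsest level summarizes the whole clip at once. The clip size (80 frames at 128×128 pixels), the four levels, and the names `ClipShape` and `unet_pyramid` are hypothetical choices for illustration, not a specification of Lumiere's internals.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ClipShape:
    """Size of a video tensor: number of frames and per-frame resolution."""
    frames: int
    height: int
    width: int


def unet_pyramid(shape: ClipShape, levels: int, downsample_time: bool) -> list[ClipShape]:
    """Return the tensor shape at each encoder level of a toy U-Net.

    Every level halves height and width (floor division). If downsample_time
    is True, the frame count is halved as well, mimicking the space-time
    downsampling idea; otherwise all frames are kept at every level.
    """
    shapes = [shape]
    for _ in range(levels):
        prev = shapes[-1]
        shapes.append(
            ClipShape(
                frames=prev.frames // 2 if downsample_time else prev.frames,
                height=prev.height // 2,
                width=prev.width // 2,
            )
        )
    return shapes


if __name__ == "__main__":
    # Hypothetical clip size and depth, chosen only for illustration.
    clip = ClipShape(frames=80, height=128, width=128)
    for label, flag in [("spatial only", False), ("space-time", True)]:
        print(label)
        for level, s in enumerate(unet_pyramid(clip, levels=4, downsample_time=flag)):
            print(f"  level {level}: {s.frames} frames at {s.height}x{s.width}")
```

Under these assumptions, the space-time version shrinks the clip from 80 frames to 5 at the coarsest level, while the spatial-only version still carries all 80 frames there. That compression in time is the intuition behind treating the whole "flipbook" at once rather than one page at a time.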

What else can Lumiere do? Users can suggest a style, through text or by providing a reference image, and Lumiere can generate a video with a similar aesthetic. Lumiere can also edit or fill in portions of existing videos. For example, the model can be used to change a person's outfit in a clip without affecting the rest of the clip.

What are the drawbacks of something like Lumiere? On its Lumiere website, the Google Research team acknowledges some of the risks posed by its software:

> Our primary goal in this work is to enable novice users to generate visual content in a creative and flexible way. However, there is a risk of misuse for creating fake or harmful content with our technology, and we believe that it is crucial to develop and apply tools for detecting biases and malicious use cases in order to ensure safe and fair use.

Those risks seem particularly relevant this week, as X (formerly Twitter) suspended ‘Taylor Swift’ searches due to a flood of explicit AI-generated images of the singer.

Of course, artists will also be concerned about where Lumiere's training data comes from and whether the software could someday be used to replace human animators. The Google Research page doesn't address either concern.

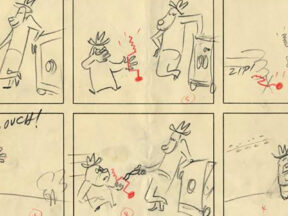

Pictured above: A series of stills from Lumiere-generated videos.

Lumiere paper authors:

Omer Bar-Tal (Google Research, Weizmann Institute)

Hila Chefer (Google Research, Tel-Aviv University)

Omer Tov (Google Research)

Charles Herrmann (Google Research)

Roni Paiss (Google Research)

Shiran Zada (Google Research)

Ariel Ephrat (Google Research)

Junhwa Hur (Google Research)

Yuanzhen Li (Google Research)

Tomer Michaeli (Google Research, Technion)

Oliver Wang (Google Research)

Deqing Sun (Google Research)

Tali Dekel (Google Research, Weizmann Institute)

Inbar Mosseri (Google Research)
