How ‘The Simpsons’ Used Adobe Character Animator To Create A Live Episode

When a live Simpsons segment was announced several weeks ago, many speculated about how it would be achieved. Would it be via motion capture? Perhaps a markerless facial animation set-up?

Ultimately, the three-minute segment, in which Homer (voiced by Dan Castellaneta) answered live questions submitted by fans, was realized with the still-in-development Adobe Character Animator, which drove the lip sync, with keyboard-triggered animations adding to the mix. Cartoon Brew got all the tech details from The Simpsons producer and director David Silverman and from David Simons, Adobe’s senior principal scientist for Character Animator and a co-creator of After Effects.


The origins of a live Simpsons

The idea for a live-animated segment had been around for several years, according to Silverman, who noted that the idea was to take advantage of Castellaneta’s ad-libbing skills. “We all know that Dan is a great improv guy. He came from Second City in Chicago, where comedians like Bill Murray and John Belushi had also performed.” However, it was not so clear what technology could be used to produce a live broadcast. That is, until The Simpsons team observed how the Fox Sports on-air graphics division was implementing the live manipulation of its robot mascot, Cleatus. That led to an investigation of Adobe Character Animator.

Still a relatively new feature in After Effects CC, Character Animator is designed to animate layered 2D characters made in Photoshop CC or Illustrator CC by transferring real human actions into animated form. Input can come from keystrokes, but the real drawcard of the tool is that it translates the user’s facial expressions, captured via webcam, onto a 2D character and uses the user’s dialogue to drive lip sync.

Facial animation was not used in the live Simpsons segment, but lip sync direct from Castellaneta’s performance was. The lip sync part works by analyzing the audio input and converting this into a series of phonemes. “If you take the word ‘map’,” explained Adobe’s David Simons, “each letter in the word would be an individual phoneme. The last step would be displaying what we’re calling ‘visemes’. In the ‘map’ example, the ‘m’ and ‘p’ phonemes can both be represented by the same viseme. We support a total of 11 visemes, but we recognize many more (60+) phonemes. In short, if you create mouth shapes in Photoshop or Illustrator and tag them appropriately in Character Animator, you can animate your mouth by simply talking into the microphone.”
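The phoneme-to-viseme step Simons describes can be sketched in a few lines of Python. This is only an illustration of the idea, not Adobe's algorithm: the phoneme symbols, the viseme names, and the `visemes_for` helper are all assumptions made for the example, and the table covers just a handful of sounds rather than the 60+ phonemes and 11 visemes he mentions.

```python
# Hypothetical phoneme-to-viseme table. The symbols and mouth-shape names
# are illustrative assumptions, not Character Animator's actual data.
PHONEME_TO_VISEME = {
    "m": "M", "b": "M", "p": "M",   # closed-lip sounds share one mouth shape
    "ae": "Ah", "aa": "Ah",
    "f": "F", "v": "F",
    "iy": "Ee",
    "ow": "Oh",
    "uw": "W-Oo", "w": "W-Oo",
}
NEUTRAL = "Neutral"  # fallback shape for phonemes missing from the table


def visemes_for(phonemes):
    """Map a phoneme sequence to mouth shapes, collapsing consecutive repeats."""
    shapes = []
    for phoneme in phonemes:
        viseme = PHONEME_TO_VISEME.get(phoneme, NEUTRAL)
        # Holding the same mouth shape does not need a new drawing.
        if not shapes or shapes[-1] != viseme:
            shapes.append(viseme)
    return shapes


# The word "map": 'm' and 'p' land on the same viseme.
print(visemes_for(["m", "ae", "p"]))
```

Many phonemes collapsing onto a few visemes is what keeps the artwork manageable: an artist draws a small set of mouth shapes and tags them, rather than one per sound.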

Interestingly, when The Simpsons team were looking to adopt Character Animator for the live segment, the tool was at the time, and still is, in preview release form (currently Preview 4). But The Simpsons team were able to work with Fox Sports to produce a prototype Homer puppet in the software that convinced everyone that a live Simpsons segment would be possible. “To ensure that the Simpsons team was using a very stable product,” said Simons, “we created a new branch of Preview 4 called ‘Springfield’ with the version number starting at x847 because that’s the price Maggie rings up in the show’s intro. We knew that good lip sync would be a priority so a lot of work went into adjusting our lip sync algorithm so the end result would be broadcast quality.”

Making animation

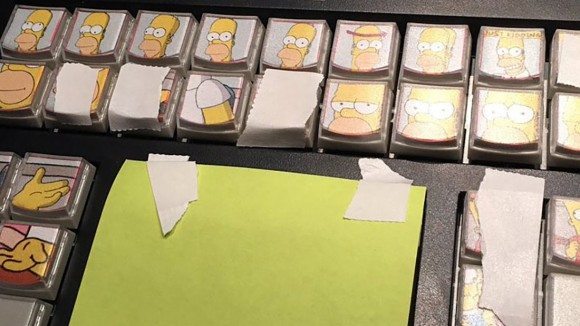

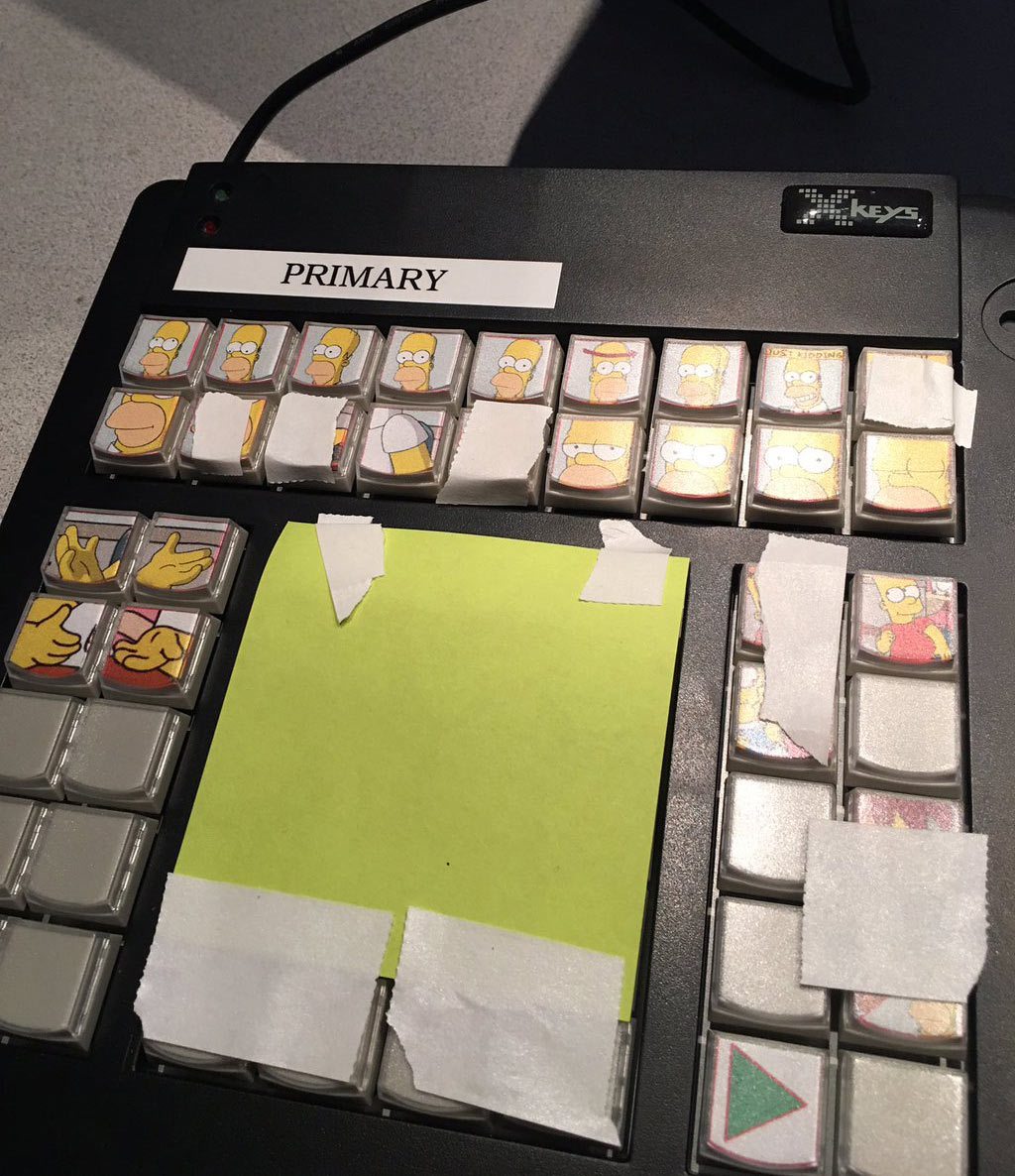

During the live segment – recorded twice for west and east coast viewers of the show – Castellaneta was situated in an isolated sound booth at the Fox Sports facility, listening and responding to callers. Silverman, meanwhile, operated the extra animation with a custom XKEYS keypad device whose keys carried printed thumbnail icons of Homer’s animations. Adobe also implemented a way to send the Character Animator output directly as a video signal via SDI, which enabled the live broadcast.

So, why was Silverman tasked with pressing the buttons? “They wanted me to work the animation because of my familiarity,” the director, who has worked on the show almost from day one, acknowledged. “I’m the guy who invented a lot of the rules for Homer [and] they always look to me as a Homer expert. So they thought it would be a good idea to have somebody who knew how the character sounded and worked.”

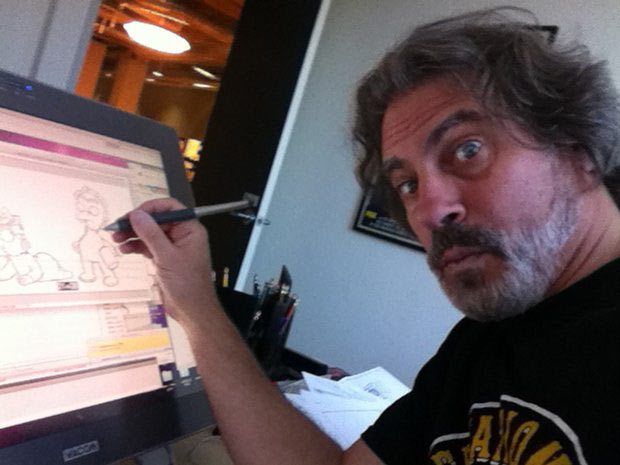

Of course, before the broadcast, the animatable pieces had to be assembled. This was done in Photoshop by The Simpsons animation team, then translated to Character Animator. “One of our animation directors, Eric Koenig, set up the animation stems that would be used,” said Silverman. “We had Homer speaking, all the dialogue mouths, the layout of the room and the animation of Homer raising his arms, turning side to side, eye blinks, et cetera. Eric Kurland then set up the programming for it by working with Adobe on all the buttons and rigging of the character.”

A range of animations was developed but not necessarily used in the final live performance. “We had a D’oh! and a Woohoo!,” noted Silverman, “but because Dan was ad-libbing it seemed to me it was going to be unlikely he would be doing those catch phrases. And we had one button that had one very specific piece of animation when Homer said, ‘Just kidding’, because that was a pre-written part of the script where he said, ‘Just kidding, the Simpsons will never die.’”

“Then there were the special animations where you press a button and, say, Lisa walks in,” added Silverman. “Originally I was pressing the buttons to cue all these people but in the end we had them come in at very specific points. Also, originally the cutting from a wide shot to close-up was being done by a different director, but then one of our producers suggested doing that automatically as you press the button. This was better to focus all my attention on Dan’s performance as Homer.”
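The arrangement Silverman describes, where one key press both plays an animation and cuts the camera, can be pictured as a lookup table of triggers. The sketch below is a guess at the general shape only: the key labels, animation names, shot names, and the `Trigger` and `TriggerBoard` classes are hypothetical and are not taken from Character Animator or from the production's actual setup.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Trigger:
    animation: str               # name of the pre-built animation to play
    shot: Optional[str] = None   # camera framing to cut to, if any


# Hypothetical key bindings, invented for this example.
TRIGGERS = {
    "1": Trigger("raise_arms", shot="wide"),
    "2": Trigger("turn_left"),
    "3": Trigger("just_kidding", shot="close_up"),
}


class TriggerBoard:
    """Plays an animation per key press and cuts the camera automatically."""

    def __init__(self, triggers, initial_shot="wide"):
        self.triggers = triggers
        self.shot = initial_shot
        self.log = []  # ordered record of cuts and animations

    def press(self, key):
        trigger = self.triggers.get(key)
        if trigger is None:
            return None  # unbound (or covered-up) key: nothing happens
        # Cut first, so the animation starts in the right framing.
        if trigger.shot is not None and trigger.shot != self.shot:
            self.shot = trigger.shot
            self.log.append(("cut", trigger.shot))
        self.log.append(("play", trigger.animation))
        return trigger.animation


board = TriggerBoard(TRIGGERS)
board.press("3")
print(board.log)
```

Bundling the cut with the animation means the operator has one decision per key press, which matches the stated goal of keeping attention on the performance.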

Silverman rehearsed with the keypad set-up and with Dan, who was on a half-second delay, a couple of times before the broadcast. “People have been asking me what are the buttons that are covered up in the photo of the keypad I published. I just covered up the buttons I wasn’t going to use. There were a lot of all these buttons for the characters walking in but they were unnecessary because we had that on automatic. There were some other buttons that were on there that I just didn’t think I would use.”

Asked whether he was nervous during the live broadcast, Silverman said he “didn’t have any worries about it. Everyone else was more worried than I was! It might be because I am a part-time musician and have no worries being on stage. I have a good sense of timing from my musical performances, especially playing bass on the tuba which means keeping a steady beat. Dan and I have also known each other for decades now and I had a good sense of how he would approach it.”

The future of live animation

Clearly there are a host of tools available for live animation right now, from gaming engines to set-ups that enable real-time markerless facial animation such as that used in Character Animator.

Adobe’s Simons said more is being done in this area for the software. “Originally in Character Animator we only had the ability to control the head’s position, rotation, and scale using the camera. We then added the ability to look left, right, up, and down, assuming you have the artwork drawn to match. There’s a lot of room for innovation here. We could do clever things with parallax and who knows what user requests will come in. We do get a lot of questions on full body capture, depth cameras, and other input devices.”

Adobe is continuing to develop other features of Character Animator, too. The current Preview 4 includes improved rigging capabilities; previously, rigging had to be set up in Illustrator or Photoshop. “We’ve added a feature called ‘tags’ which allows you to select a layer in Character Animator and tag it with an existing tag name,” said Simons. “We also have a new behavior called Motion Trigger. This behavior will trigger an animation based on the character’s movement. There are still some fundamental pieces we have to deliver such as an end-to-end workflow and further integration, such as with After Effects and Adobe Media Encoder. We’d also like to increase the interoperability of the products for people who want to do recorded animation.”

For his part on The Simpsons live segment, Silverman was pleased with the results. “There were a couple of head-turns that maybe included a smile that Homer wouldn’t normally do, but overall I think it was excellent,” he said. “Perhaps if I had had more practice I would have been a little more, animated, shall we say.”
