Digital Humans In Real-time Are The Star In Neill Blomkamp’s Latest Short

When Cartoon Brew last checked in back in June with Neill Blomkamp’s experimental film outfit, Oats Studios, they had just released the short Rakka. Since then, several other short films and experimental pieces have come out of Oats, including ones created with the Unity game engine.

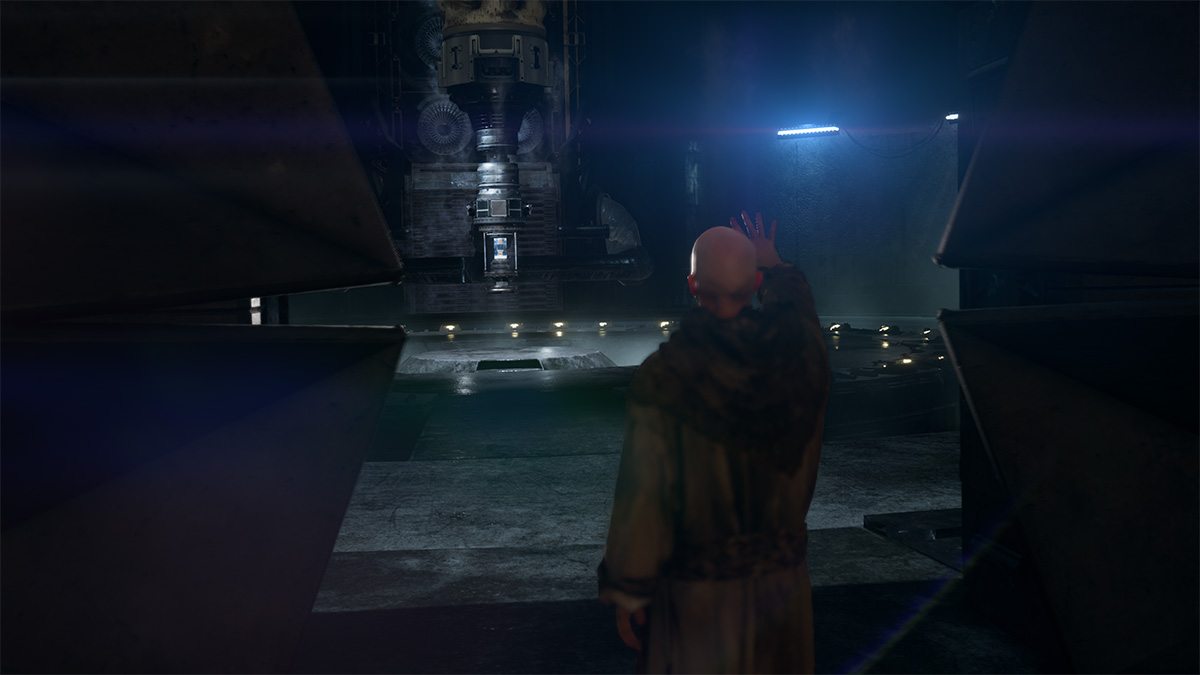

Oats’ most recent collaboration with Unity, a short called Adam: Episode 3, extends what Blomkamp’s team had already done with the real-time engine by exploring digital humans for its two main characters.

At SIGGRAPH Asia in Bangkok, where Adam: Episode 3 was launched, Cartoon Brew caught up again with Oats visual effects supervisor Chris Harvey to find out what specific challenges were involved in rendering digital humans in real time.

Journey to real-time

To quickly recap, Blomkamp set up Oats Studios in Vancouver after already making a number of complex visual effects-heavy studio feature films (District 9, Elysium, and Chappie). Oats was designed to go back to the director’s roots in shorts and explore new ways of testing out content and connecting with audiences. The shorts were shared online, as were some direct assets from the productions.

Even though they were experimental, the films still followed a largely typical production life cycle, from pre-production through to post. CG and visual effects were important aspects of most of the shorts, which meant Oats had incorporated motion capture, photogrammetry, intricate modeling and rigging, and various rendering techniques into its pipeline.

Then, at one point, Unity Technologies – the makers of the Unity game engine – approached Blomkamp with the idea of applying the experimental side of Oats to a short film rendered in real-time. Unity had already done that with a 2016 tech demo of its own, called Adam, which had effectively been released as a short film itself. Unity’s intention was to highlight the physically plausible qualities of the game engine and its capabilities as a filmmaking tool.

Oats took on the Adam assets, and developed additional ones, to make Adam: Episode 2 with Unity. “This was a whole new experience for us to try, and a learning curve as well,” said Harvey. “One of the big questions was, how will we adapt as a traditional filmmaking company to using something like Unity? Much to our joy and surprise, it was not nearly as painful as I was worried about. It was actually quite seamless to be able to move into something like this.”

Adam: Episode 2 was actually made largely like Oats’ other shorts. Assets were modeled, rigged, textured, and animated in traditional CG tools. Action was also recorded via performance capture, including facial capture. Unity’s Timeline sequencing tool was then used to manage scenes and edit them together.

For Harvey, the benefit of working in real time was obvious. The filmmakers could get near-instant feedback on shots; remember, there’s no need for a render farm in real time, so many more iterations were possible. “Literally, the film just existed live at all times,” he said. “Any time we added something new, it was there and all done, at any time. You could turn the camera around, you could move a light, you could add an object. Whatever you wanted to do, it was just always running, which was really, really cool.”

“At one point,” added Harvey, “I was getting worried about a certain asset in this new film. I think it was [the female character’s] mask that was re-developed. We were changing the way it looked, because at one point, it covered more of her face and you couldn’t see her emotion. We wanted to remove it and make the whole thing glass. And I’m like, ‘We really need to get this in, because we’ve got to get it into the shots. We need to see what it starts looking like.’ I was thinking about how I used to do things, where you’d put it in the shots, and maybe a week later I’d get to see it. But here, a couple hours later, I was at a different artist’s desk, looking at where we were putting rocks, and her mask was in all of the shots! It was a head trip, because it was just like, ‘Oh, it’s there live all the time – always.’”

Adding digital humans to the mix

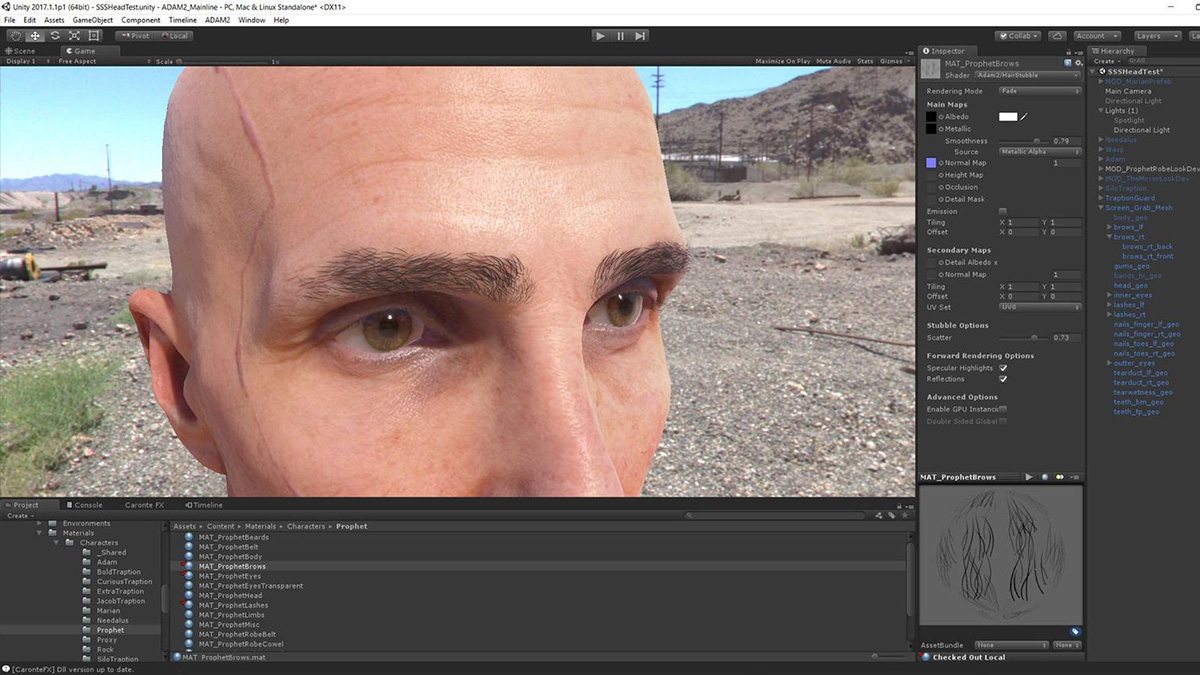

The production side of real-time was an obvious benefit to the filmmaking process for Oats. But could a real-time engine produce imagery of high enough quality to make believable digital humans? Adam: Episode 3 set out to deliver exactly that. Animation of the humans in the short was driven by body and facial capture, while the main approach to making them photoreal lay in scanning actors for reference and using Unity’s shaders for sub-surface scattering (the phenomenon in which light penetrates the surface of the skin, scatters within it, and then exits at a different point).

Sub-surface scattering in traditional offline renderers is key to some of the more believable digital humans made in big Hollywood productions. While it has certainly been tackled in some real-time projects previously, including with other game engines, this was one of the first times it had been attempted at such a large scale with Unity. Harvey says that, in some ways, Oats ignored the fact that they were using a game engine at all, and tried to solve the digital human problem as if they were making a typical film. For example, they utilized the Alembic format (common in many visual effects projects) for streaming cloth and facial animation.

“When we went into it,” said Harvey, “we didn’t look at what’s been done and say, ‘Well, how can we make it better?’ We came into it thinking, ‘Where would we want this to look if we were making it in a regular film?’ We’re not going to think about it like we’re restricted by a game engine; we’re going to try to make it look as good as we can make it look, just like we would with any other tool. And because of that, we’re going to try to approach it the same way.”

Still, there were limitations inherent in relying on a real-time engine to produce something so complicated. Because the team wanted the film to run in real time at 30 frames per second, Oats had to make some concessions. “We could’ve set it to render one frame a second, but we said we want to do 30 frames a second,” said Harvey. “By putting that artificial limit on ourselves, that, in turn, set the technical limits within the engine. Our faces could only be a certain resolution if we wanted them to stream at that speed. The cloth could only be a certain resolution. Even though we had the capability of making it higher resolution, we had a technical roadblock that it just couldn’t keep up at 30 frames per second.”
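To put Harvey’s point in numbers: running at 30 frames per second leaves roughly 33.3 milliseconds to produce each frame, compared with a full 1,000 milliseconds at one frame per second. The short Python sketch below illustrates that budget arithmetic. The per-task cost breakdown in the usage example is purely hypothetical and is not data from the Adam: Episode 3 production.

```python
def frame_budget_ms(fps: float) -> float:
    """Milliseconds available to produce each frame at a given frame rate."""
    return 1000.0 / fps


def fits_budget(per_frame_costs_ms: dict[str, float], fps: float) -> bool:
    """True if the summed per-frame work fits inside the frame budget."""
    return sum(per_frame_costs_ms.values()) <= frame_budget_ms(fps)


# Hypothetical per-frame costs in milliseconds (illustrative only).
costs = {"face_stream": 12.0, "cloth_stream": 10.0, "lighting_and_skin": 15.0}

print(f"{frame_budget_ms(1):.1f} ms per frame at 1 fps")
print(f"{frame_budget_ms(30):.1f} ms per frame at 30 fps")
print(fits_budget(costs, 30))  # 37 ms of work exceeds the ~33.3 ms budget
print(fits_budget(costs, 1))
```

In this made-up example the same workload fits easily at one frame per second but overruns at 30, which is why higher-resolution face and cloth data had to be scaled back.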

Accessible filmmaking

While Oats Studios might have the clout of someone like Blomkamp behind it and the expertise and experience of many CG and visual effects artists like Harvey, the real-time films it has now made also demonstrate that a new kind of filmmaking is possible. Unity is free for beginners, students, and hobbyists, and the Adam assets are also available to download and use. The version of Unity used by Oats is the same one available to the world (in fact, Oats used an older version than the current release).

Harvey recalls Blomkamp himself being stunned at what filmmaking tools are now commonplace, compared to what he had when getting into the industry. “Neill’s talked a lot about this. He’s like, ‘Man, when I was trying to get into film, if only I had had these tools.’ You had to go buy a pretty expensive camera, and run around and film it. Now, you can just start making stuff. I think you’ll see a lot more people being able to tell stories, and those stories are going to be coming from all over the place.”
