Apple’s Keyframer AI Lets Users Generate Animation And Edit The Results With Additional Text Prompts

Apple’s recent unveiling of Keyframer, an AI-powered animation tool, has sparked curiosity and excitement among tech enthusiasts while causing trepidation among animators.

Last week, the company released a 31-page research paper detailing a study titled “Keyframer: Empowering Animation Design Using Large Language Models.” The paper, available as a PDF, explains how Keyframer extends generative AI capabilities and includes reactions and reflections from several study participants and the researchers who led the exercise.

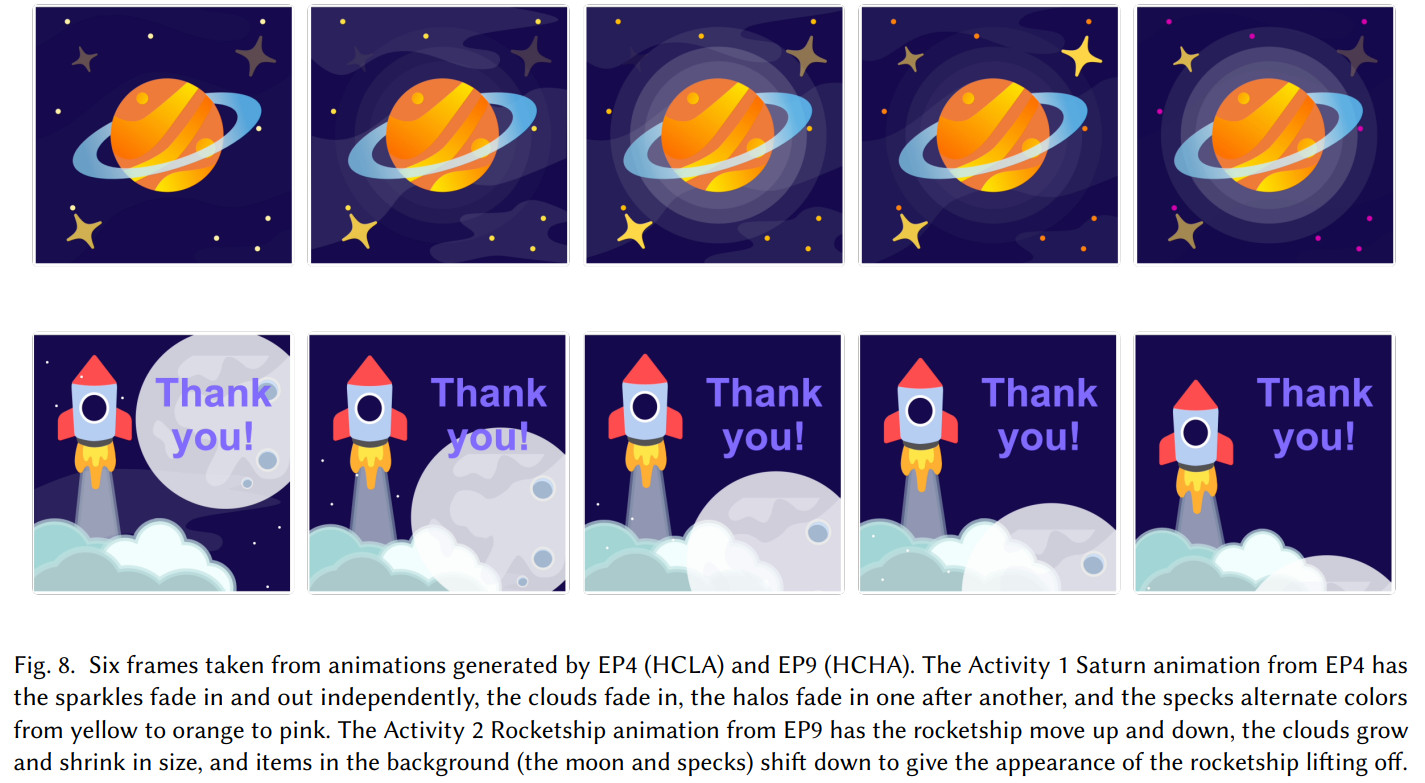

What is Keyframer? Apple’s new software is a design tool, powered by a large language model (LLM), that uses text prompts to add motion to still 2D images. Users can then edit those results through further prompts. Built on OpenAI’s GPT-4, the tool lets users upload a Scalable Vector Graphics (SVG) file and describe in natural language what movement they’d like added to the image. The system does the rest, generating the animation code. Examples in the research paper include adding sparkling stars to a space scene and a rocket ship moving past the moon.

What are the limitations of the software? According to the paper’s authors, Keyframer currently focuses only on web-based animation such as loading screens, data visualizations, and short transitions. Judging by the examples provided in the paper, Keyframer has a long way to go before it can generate complex animated movement.

How is Keyframer different from other generative AI models? With many existing generative AI programs, once an image or short clip is generated, that output is final, and users who want to change it must edit it manually. Keyframer was designed to let users keep refining their results by entering additional text prompts that describe the changes, additions, or omissions they’d like to make. According to the study, “Direct editing along with prompting enables iteration beyond one-shot prompting interfaces common in generative tools today.”

Why is Apple working on this software? According to the company, “Large language models have the potential to impact a wide range of creative domains, but the application of LLMs to animation is under-explored and presents novel challenges such as how users might effectively describe motion in natural language.”

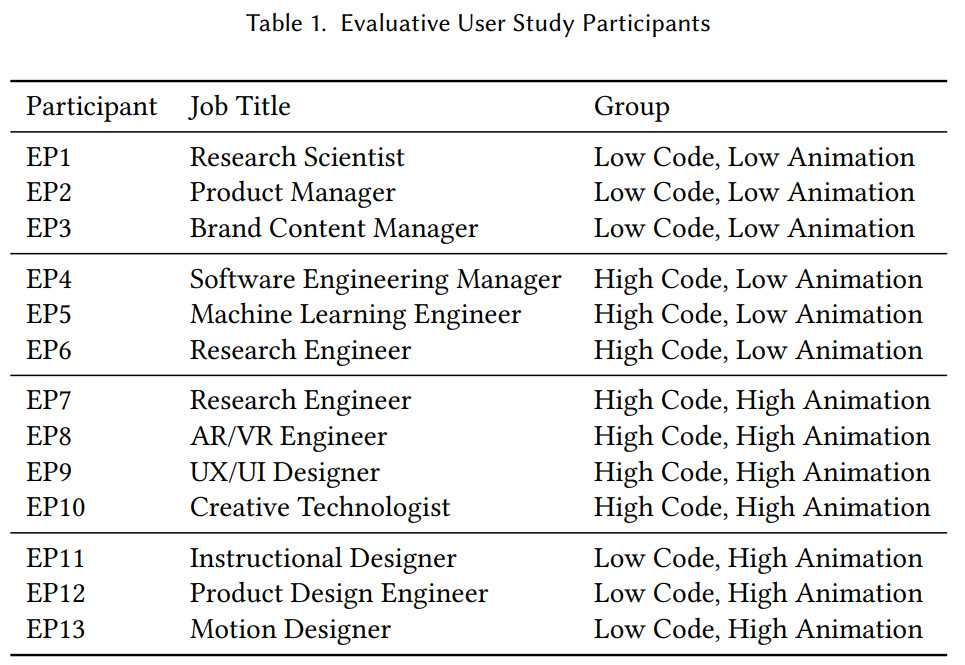

How was this study carried out? Apple invited 13 participants with a range of animation and programming experience to test the software. During the study, participants were given 90 minutes to use Keyframer to animate two provided illustrations, with full freedom to decide how to prompt the system and refine the results. Researchers observed the participants to find out:

- What design strategies do users take to prompt LLMs for animations using natural language?

- How does Keyframer support iteration in animation design?

What were participants evaluating? According to the researchers, participants (who were compensated with “$12 cafeteria vouchers for their time”) were asked to consider, among other questions:

- Do you think you discovered any strategies for prompting that seemed to work better?

- Are there features you would want this tool to be able to do?

- Could you imagine using this type of technology for animation design in the future?

What did the participants think? The only motion designer among the participants was impressed by the tool but also expressed concerns about the impact that Keyframer could have on their career:

Part of me is kind of worried about these tools replacing jobs because the potential is so high. But I think learning about them and using them as an animator, it’s just another tool in our toolbox. It’s only going to improve our skills. It’s really exciting stuff.

A product manager who participated in the test said:

I think this was much faster than a lot of things I’ve done… I think doing something like this before would have just taken hours to do.

And a research engineer from the group explained:

This is just so magical because I have no hope of doing such animations manually… I would find [it] very difficult to even know where to start with getting it to do this without this tool.

What was the researchers’ take on the study? The paper’s authors concluded that “LLMs can shape future animation design tools by supporting iterative prototyping across exploration and refinement stages of the design process.” The researchers were particularly impressed by how Keyframer uses “natural language input to empower users to generate animations.”

They further argue that the study “illustrated how we can enable animation creators to maintain creative control by providing pathways for iteration, with users alternating between prompting and editing generated animation code to refine their designs.”

Where can I try out Keyframer? Currently, Keyframer is only being used in-house at Apple, and the company hasn’t said if or when it will be available to the public.

Keyframer paper authors:

Tiffany Tseng, Apple

Ruijia Cheng, Apple

Jeffrey Nichols, Apple
