Google’s Genie AI Model Creates Video Game-Like Interactive Environments From Text And Image Prompts

Google’s DeepMind team recently published a research paper (available as a PDF) that shows off what the group’s Genie AI model is capable of, and it could have significant implications for the future of video game development.

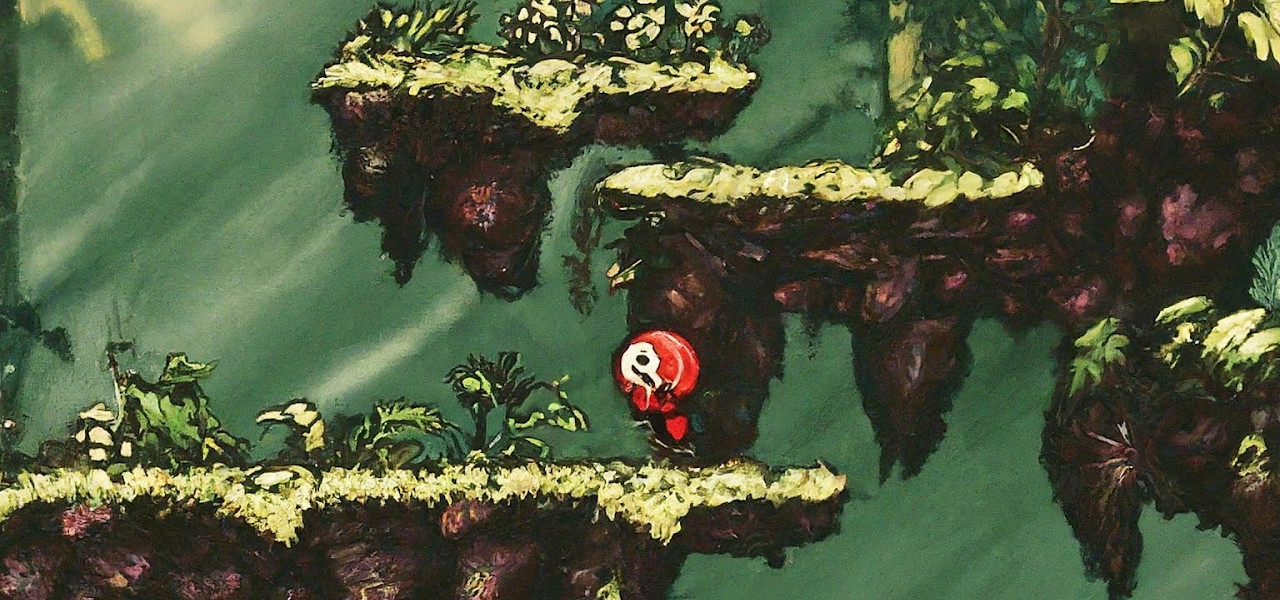

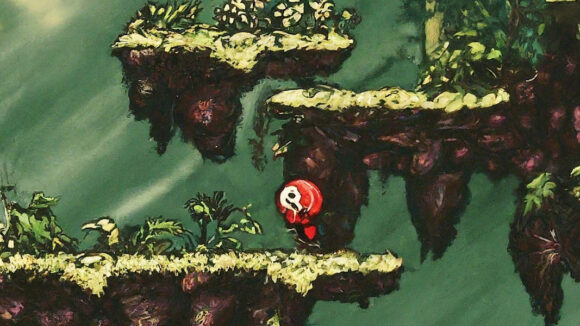

What is Genie? Genie, short for generative interactive environments, is an AI model that can generate action-controllable 2D worlds based on text or image prompts. Essentially, it can create crude 2D platformer-style video games. The model was trained on more than 200,000 hours of video gameplay to learn how to move characters in a consistent manner. This allows Genie to generate coherent frames in real time based on the player’s actions.

How are the results? Early examples of Genie’s outputs are basic but impressive. It’s safe to say there is a long way to go before AI-generated games will be enjoyable for users. Currently, Genie’s games run at just one frame per second, compared to 30-60 frames per second for most console games and 100+ frames per second for many PC games. However, the model’s creators say there is no fundamental limitation that will prevent Genie from reaching 30 frames per second in the future.
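To put those frame rates in perspective, the short Python sketch below converts each one into the time available to produce a single frame, and computes how much faster generation would need to be to go from one frame per second to 30. The function names are made up for this illustration; only the frame rates come from the article.

```python
def frame_budget_ms(fps: float) -> float:
    """Time available to produce one frame, in milliseconds."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def required_speedup(current_fps: float, target_fps: float) -> float:
    """Factor by which per-frame generation must get faster."""
    return target_fps / current_fps


# Frame rates mentioned above: Genie today, console range, many PC games.
for fps in (1, 30, 60, 100):
    print(f"{fps:>3} fps -> {frame_budget_ms(fps):.1f} ms per frame")

print(f"Speedup needed for 30 fps: {required_speedup(1, 30):.0f}x")
```

In other words, a model that currently has a full second to produce each frame would need to do the same work in roughly 33 milliseconds.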

What’s unique about this model? Genie’s creators refer to it and other similar models, like OpenAI’s Sora, as “world models.” A world model is a system that builds an internal representation of an environment and uses it to simulate future events within that environment. While Sora creates coherent and near-photorealistic videos, Genie’s results are interactive.
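The idea of a world model can be illustrated with a deliberately tiny example. The Python sketch below is not Genie’s architecture; it is a hypothetical lookup-table model (the class `TabularWorldModel`, the five-position track, and the `left`/`right` actions are all invented here) that records what it observes and then replays that knowledge to simulate what would happen next, without touching the real environment.

```python
from collections import Counter, defaultdict


class TabularWorldModel:
    """Toy world model: remembers observed transitions and replays them."""

    def __init__(self):
        # (state, action) -> counts of the next states that were observed
        self._counts = defaultdict(Counter)

    def observe(self, state, action, next_state):
        """Build the internal representation from one observed transition."""
        self._counts[(state, action)][next_state] += 1

    def predict(self, state, action):
        """Most frequently observed outcome; unseen pairs leave state as-is."""
        outcomes = self._counts.get((state, action))
        if not outcomes:
            return state
        return outcomes.most_common(1)[0][0]

    def simulate(self, state, actions):
        """Roll the model forward through a sequence of actions."""
        trajectory = [state]
        for action in actions:
            state = self.predict(state, action)
            trajectory.append(state)
        return trajectory


def true_step(pos, action):
    """The 'real' environment: a track with positions 0..4 and walls."""
    delta = 1 if action == "right" else -1
    return min(4, max(0, pos + delta))


model = TabularWorldModel()
for pos in range(5):
    for action in ("left", "right"):
        model.observe(pos, action, true_step(pos, action))

# Simulate a future purely from the model's internal table.
rollout = model.simulate(0, ["right", "right", "left", "right"])
print(rollout)
```

Genie replaces the lookup table with a learned neural network and the integer positions with video frames, but the loop is the same in spirit: take the current state and an action, and predict what comes next.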

Is Genie the first model that can do this? Existing models, like Nvidia’s GameGAN, can produce interactive results like those of Genie. Until now, however, those other models have required input actions in the training process, such as pressing buttons on a controller, in addition to video footage. Genie was trained on video footage alone and learned eight possible actions that would cause a character to move on-screen.
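One way to picture learning actions from video alone is to look only at what changed between two consecutive frames and assign that change to one of a small, fixed number of codes. The Python sketch below is a toy illustration under heavy assumptions: it tracks a character as a 2D position rather than a full frame, and the eight codes are hand-picked compass directions, whereas a model like Genie would have to learn its codes from data. The names `CODEBOOK` and `infer_latent_action` are hypothetical.

```python
import math

# Eight hypothetical "latent action" codes: unit steps in compass directions.
# Chosen by hand for illustration; a real model would learn these from data.
CODEBOOK = [
    (math.cos(k * math.pi / 4), math.sin(k * math.pi / 4)) for k in range(8)
]


def infer_latent_action(prev_pos, next_pos):
    """Return the index of the code closest to the observed displacement."""
    dx = next_pos[0] - prev_pos[0]
    dy = next_pos[1] - prev_pos[1]
    return min(
        range(len(CODEBOOK)),
        key=lambda k: (CODEBOOK[k][0] - dx) ** 2 + (CODEBOOK[k][1] - dy) ** 2,
    )


# A character's position across five "frames"; no button presses are recorded.
track = [(0, 0), (1, 0), (2, 1), (2, 2), (1, 2)]
actions = [infer_latent_action(a, b) for a, b in zip(track, track[1:])]
print(actions)
```

No controller input appears anywhere in the data; the action labels are recovered entirely from how the scene changed, which is the property that sets Genie apart from models that need recorded inputs.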

What’s next for Genie? Genie was developed for in-house use only, and there are no current plans to release it to the public. That said, the DeepMind team did say its model could one day become a game-making tool:

Genie could enable a large amount of people to generate their own game-like experiences. This could be positive for those who wish to express their creativity in a new way, for example, children who could design and step into their own imagined worlds. We also recognize that with significant advances, it will be critical to explore the possibilities of using this technology to amplify existing human game generation and creativity—and empowering relevant industries to utilize Genie to enable their next generation of playable world development.

Author Contributions:

Jake Bruce: project leadership, video tokenizer research, action model research, dynamics model research, scaling, model demo, infrastructure

Michael Dennis: dynamics model research, scaling, metrics, model demo, infrastructure

Ashley Edwards: genie concept, project leadership, action model research, agent training, model demo

Edward Hughes: dynamics model research, infrastructure

Matthew Lai: dataset curation, infrastructure

Aditi Mavalankar: action model research, metrics, agent training

Jack Parker-Holder: genie concept, project leadership, dynamics model research, scaling, dataset curation

Yuge (Jimmy) Shi: video tokenizer research, dynamics model research, dataset curation, metrics

Richie Steigerwald: dataset curation, metrics

Partial Contributors and Advisors

Chris Apps: project management

Yusuf Aytar: technical advice

Sarah Bechtle: technical advice

Feryal Behbahani: strategic advice

Stephanie Chan: technical advice

Jeff Clune: technical advice, strategic advice

Lucy Gonzalez: project management

Nicolas Heess: strategic advice

Simon Osindero: technical advice

Sherjil Ozair: technical advice

Scott Reed: technical advice

Jingwei Zhang: technical advice

Konrad Zolna: scaling, technical advice
