Disney And Other Researchers Are Developing A New Method For Automated Real-Time Lip Sync

Automated lip sync is not a new technology, but Disney Research, in tandem with a group of researchers at University of East Anglia (England), Caltech, and Carnegie Mellon University, have added a twist to it: deep learning.

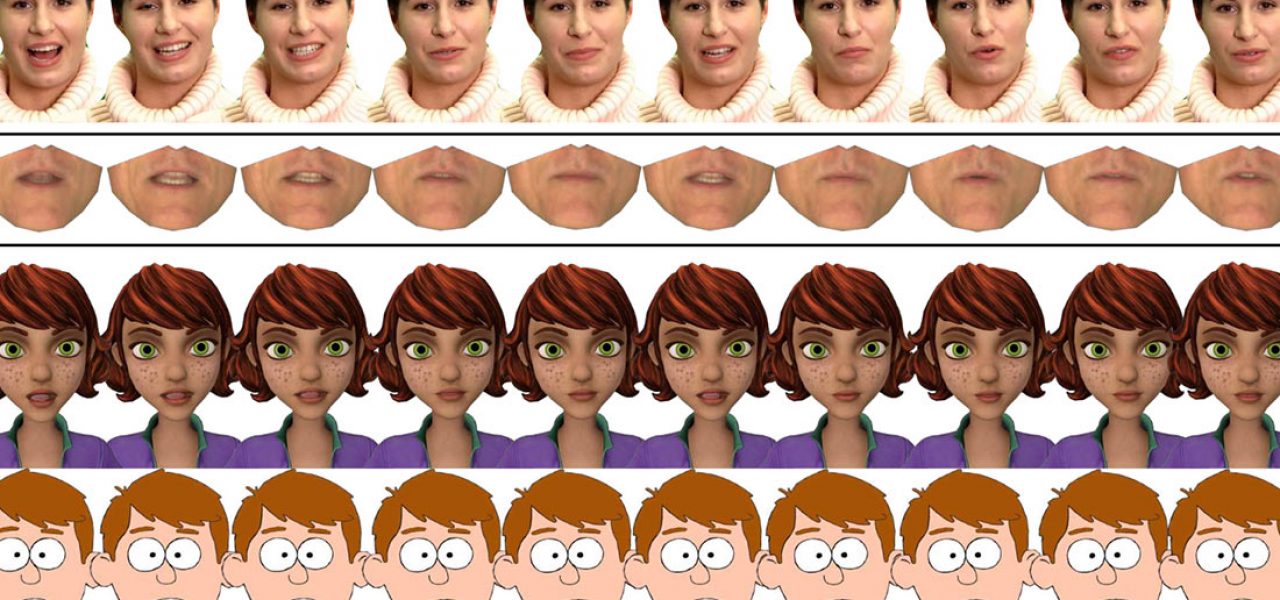

By training a neural network, the researchers are using a deep learning approach to generate real-time animated speech. In addition to automatically generating lip sync for English speaking actors, the new software can be applied to singing or adapted for foreign languages. The technology was presented at the most recent SIGGRAPH computer graphics conference in Los Angeles.

Realistic speech animation is essential for effective character animation,” said lead researcher Dr. Sarah Taylor, from UEA’s School of Computing Sciences. “Done badly, it can be distracting and lead to a box office flop. Doing it well however is both time consuming and costly as it has to be manually produced by a skilled animator. Our goal is to automatically generate production-quality animated speech for any style of character, given only audio speech as an input.”

The team of researchers designed a system that trains a computer to take spoken words from a voice actor, predict the mouth shape needed, and then animate the character’s lip sync.

The process requires both recorded audio, as well as eight-hours video reference of a single speaker reciting a collection of more than 2,500 phonetically diverse sentences, the latter of which is tracked to create a “reference face” animation model.

The audio is transcribed into speech sounds (phonemes) using off-the-shelf speech recognition software. Those phonemes are then applied to the reference face, and those results can be retargeted to any real-time cg character rig.

“Our automatic speech animation therefore works for any input speaker, for any style of speech, and can even work in other languages,” said Taylor. “Our results so far show that our approach achieves state-of-the-art performance in visual speech animation. The real beauty is that it is very straightforward to use, and easy to edit and stylize the animation using standard production editing software.”

While it would be easy to scoff at the quality of the technology based on these early examples, it’s not difficult to envision how in 10 to 20 years, automated lip sync could form the foundation of most computer-generated characters. In its current state, real-time lip sync could serve a valuable role for gaming applications, location-based animation projects, and tv series that require large volumes of modestly-budgeted animation.

The Walt Disney Company is not the only entity exploring automated speech and facial animation technologies. An entire track was devoted to the topic at the most recent SIGGRAPH, with multiple technical papers presented on latest developments.

The paper about this project – “A deep learning approach for generalized speech animation” – can be downloaded here.

.png)