SIGGRAPH 2017 Preview: Real-Time Projects in Animation, VR, and Gaming

Over the years, the SIGGRAPH conference has had to adapt to changes in computer graphics and interactive technologies. One ‘track’ introduced in 2009 was Real-Time Live, designed to showcase developments in real-time graphics in games, rendering, and interactive simulations – but even this part of SIGGRAPH has had to evolve since its inception.

Real-Time Live – which takes place in a fun flurry of evening demos – now encompasses all manner of presentations, from animation tools to game engines, vr and ar editors, and real-time human-like avatars.

With more and more animation and visual effects being done within a real-time paradigm, Cartoon Brew checks out a Real-Time Live project that allows for the interactive watercolor stylization of animated 3D scenes, and previews the other technologies that will be demonstrated at SIGGRAPH.

The idea behind Real-Time Live

Some sessions at SIGGRAPH are talks, and some are demonstrations of new software and hardware. Real-Time Live is a bit of both: it's a collection of presentations packed into a one-and-three-quarter-hour block, with the highlight being the actual real-time demos of the techniques.

An industry-based jury selects the projects that make it to SIGGRAPH. The criteria are whether the project can demonstrate graphics being rendered in real time and whether it showcases an innovative approach to using real-time technology.

“The jurors' assessment and professional opinion contribute in the final selection process for a project to be accepted into the program,” Real-Time Live chair Cristobal Cheng told Cartoon Brew. “But it also comes down to the project’s innate ability to draw in and captivate an audience looking to see the technology behind their real-time capability.”

Interactive 3D watercolors

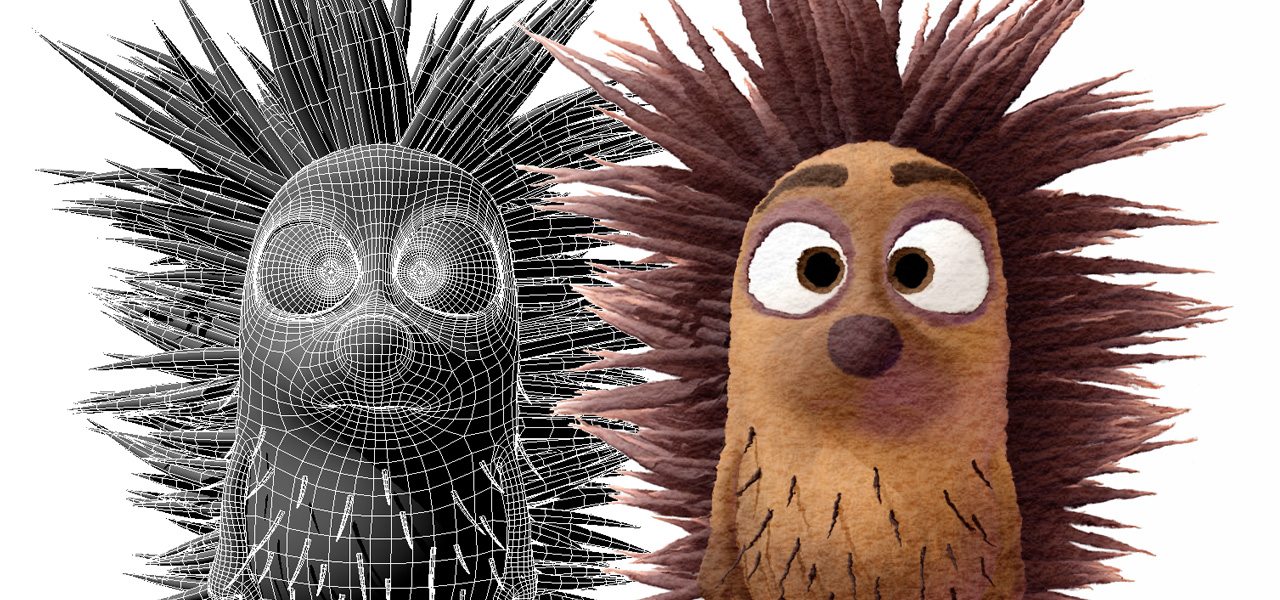

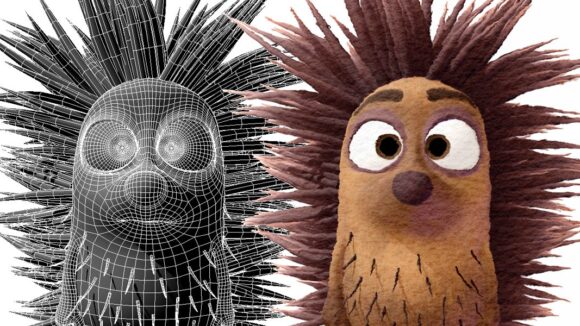

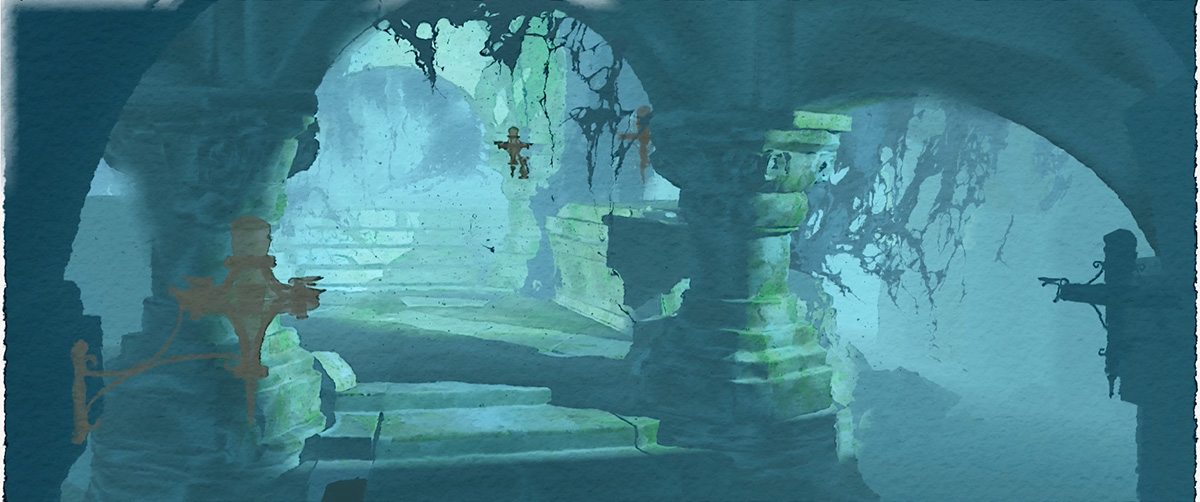

One of the Real-Time Live projects is called ‘Direct 3D Stylization Pipelines’ (pictured at top) and will showcase a system that allows artists to ‘art-direct’ a 3d scene by painting directly on a 3d object to introduce watercolor-like results. It comes out of research being led by Santiago Montesdeoca at Nanyang Technological University in Singapore, where he is undertaking a PhD in this area.

“Watercolor paintings present many localized effects, such as color bleeding and dry-brush, which need to be art-directed in a 3d (object-space) emulation,” said Montesdeoca. “To better achieve this, we needed a quick and effective way to interact with local stylization parameters in object-space and see the results in real-time.”

Why is real-time important in doing something like this? Montesdeoca’s idea is to replicate what happens when painting with traditional media (which is, of course, done in real time), including the randomness that occurs when pigments, water, and paper interact. The system is “a simple real-time renderer with stylization control buffers and post-processing and compositing stages,” said Montesdeoca. It is currently implemented in Autodesk Maya (where it's being called the Maya Non-photorealistic Rendering Framework) and has been tried out by scores of artists. The plan is to open source the toolset in the near future.
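To give a rough sense of how a “stylization control buffer” can drive a post-processing and compositing stage, here is a minimal sketch. It is our own hypothetical illustration, not code from the Maya Non-photorealistic Rendering Framework: it assumes a grayscale rendered image, a per-pixel “bleed” value (0 to 1) that an artist has painted, and a simple box blur standing in for watercolor color bleeding. The function names `box_blur` and `composite_bleed` are made up for this example.

```python
from typing import List

# Hypothetical sketch only: a grayscale image stored as rows of floats.
Image = List[List[float]]


def box_blur(img: Image, radius: int = 1) -> Image:
    """Post-processing pass: average each pixel with its neighbors (edges clamped)."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total, count = 0.0, 0
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    yy, xx = y + dy, x + dx
                    if 0 <= yy < h and 0 <= xx < w:
                        total += img[yy][xx]
                        count += 1
            out[y][x] = total / count
    return out


def composite_bleed(color: Image, bleed_control: Image, radius: int = 1) -> Image:
    """Compositing pass: the painted control buffer decides, per pixel,
    how much of the blurred ("bled") image replaces the original render."""
    blurred = box_blur(color, radius)
    return [
        [(1.0 - c) * o + c * b for o, b, c in zip(row_o, row_b, row_c)]
        for row_o, row_b, row_c in zip(color, blurred, bleed_control)
    ]


# A single bright pixel, with bleeding painted only onto that pixel.
render = [[0.0, 0.0, 0.0], [0.0, 9.0, 0.0], [0.0, 0.0, 0.0]]
painted = [[0.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 0.0]]
print(composite_bleed(render, painted))
```

Because the effect is looked up per pixel from a buffer rather than applied uniformly, an artist can localize it wherever they paint, which is the kind of local art-direction Montesdeoca describes; a production system would render such buffers from parameters painted onto the 3d objects themselves.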

Some Cartoon Brew readers may recall our piece last year on a different 3d stylization research project called StyLit. Montesdeoca is familiar with that work, noting that both projects belong to the field of ‘expressive rendering’ and both aim to emulate traditionally drawn or painted imagery.

The difference, says Montesdeoca, is in the source of stylization and the use of scene lighting. “Ours is a direct stylization approach, which doesn’t require a painted source image, but needs a carefully orchestrated and optimized rendering pipeline,” said Montesdeoca. “This allows us to attain localized art-direction and still retain real-time performance (30-60 plus fps). Our approach is not constrained by the lighting in the scene, performs quite well with scene complexity, and supports core mainstream shading elements such as texture mapping.”

Ultimately, the Direct 3D Stylization Pipelines research is aimed at finding a place in animation. Montesdeoca hopes it could be used to diversify from the “usual cg rendered look commonly found nowadays.”

“Especially in animated feature films, it is necessary to have stylistic variety to aid telling a wider range of stories and let studios differentiate themselves from the rest,” Montesdeoca said. “By retaining the real-time performance, I can also see our technology and the use of direct stylization pipelines in games and vr in the future. Just imagine being immersed in a watercolor world.”

What else to expect at Real-Time Live

The Mill’s use of its Blackbird tracking car, Cyclops production kit, and Unreal Engine from Epic Games in the short film The Human Race is another drawcard project in the Real-Time Live line-up. This set-up allows car commercials (and other things) to be shot while the filmmakers and clients see the car inserted, and changed, in real time. It has applications for consumers, too, who can ‘choose’ their desired vehicle and its specs.

Real-time simulation demos at Real-Time Live include an Nvidia project simulating a large-scale ocean scene, complete with boats and floating bodies, which is usually an extremely hard task to do interactively. Another project, from ETH Zürich and Disney Research, will demo real-time fluid simulation.

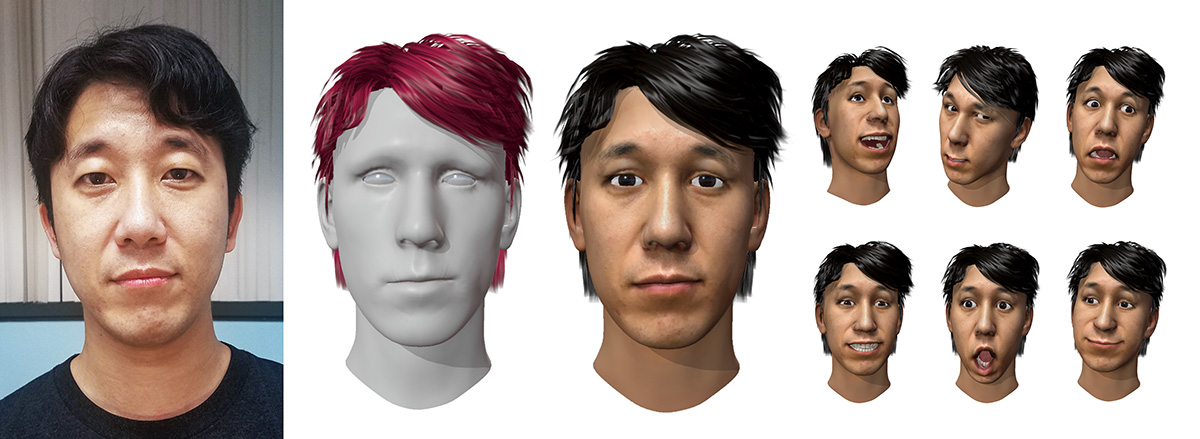

Meanwhile, the program also offers a place to see technology that has so far remained relatively secret, or at least unseen by many. Little has been publicly revealed about what digital avatar company Pinscreen has been up to. But at SIGGRAPH the company will demonstrate its process for creating a complete 3d avatar from just one image of a person’s face, which can then be animated in real time via ‘performance capture’ on a webcam.

Former Pixar artist Tom Sanocki’s Limitless VR Creative Environment, which Cartoon Brew previously covered, will be demoed. Another vr-related tool on show will be Unity’s EditorVR, which allows game designers to edit their Unity scenes directly in vr. Penrose Studios is also showing Penrose Maestro, a tool that lets artists collaborate on scenes in vr.

Meanwhile, Unigine, a vr software platform, is presenting its ray tracing techniques for interactive environments. And rounding out the real-time vr-related projects at Real-Time Live is a look at Star Wars Battlefront VR from Criterion Games, which utilized the Frostbite game engine.

Real-Time Live takes place on Tuesday, August 1st from 6-7:45pm in South Hall K.
